ARiMI Learning Series:

AI FOR RISK PROFESSIONALS & LEADERS

This chapter is part of the “AI For Risk Professionals & Leaders” learning series, designed to help risk professionals and leaders engage with AI in ways that complement sound judgment, strategic thinking and ethical practice. Whether you are a certified expert or a curious practitioner, each chapter offers practical guidance to support the confident, clear and responsible use of AI tools in risk management.

Clarifying Accountability

Building on Structured AI Integration

In earlier chapters, we established how Artificial Intelligence is transforming risk management roles (Chapter 1) and identified critical governance gaps that arise when principles lack practical implementation (Chapter 2). Most recently, Chapter 3 focused on everyday AI applications, stressing that the responsible use of AI enhances, rather than replaces, professional judgment.

This chapter addresses a crucial next step in structured AI adoption: accountability. As risk professionals rely increasingly on AI tools, clear accountability becomes essential, particularly when AI outputs lead to unexpected, erroneous, or harmful outcomes.

Clarifying Accountability Amidst Complexity

AI’s sophisticated algorithms and automated processes can obscure the question of responsibility. When a model goes “rogue”, producing biased, incorrect, or harmful results, it immediately raises the question of who is accountable. Without clear accountability frameworks, organisations face significant operational, legal, and reputational risk.

Core Dimensions of AI Accountability

To navigate accountability clearly, organisations need to consider four core dimensions:

Design and Development Accountability:

Developers must prioritise ethical AI creation, transparency, and robust oversight mechanisms.

Operational Accountability:

Risk professionals and users are responsible for ensuring the appropriate and disciplined application of AI tools.

Monitoring and Governance:

Organisations must continuously evaluate performance, detect anomalies, and respond rapidly to identified AI issues.

Incident Remediation:

Organisations need swift, structured protocols for managing and correcting AI-driven failures.

What This Means for Risk Professionals

For certified professionals, this isn’t just conceptual material; it’s a practical shift in responsibility. AI accountability requires more than awareness: it demands action, structure, and judgment.

Risk professionals are expected not only to recognise governance gaps, but to lead conversations, initiate protocols, and uphold standards in how AI is applied.

To support this, ARiMI has designed structured tools, simulation-based learning, and role-specific resources that reinforce professional oversight in real-world scenarios. These will be introduced progressively as part of ARiMI’s expanding applied training modules.

Roles and Responsibilities in AI Accountability

The ARiMI accountability framework defines clear roles:

- Developers and Vendors: Document AI functionalities, constraints, and intended uses transparently.

- Risk Professionals and Users: Validate and contextualise AI outputs, ensuring alignment with organisational risk frameworks and ethical guidelines.

- Executive and Board Leadership: Set ethical standards, provide strategic oversight, and ensure comprehensive governance of AI integration.

Each stakeholder group has defined responsibilities. Accountability is not diluted by AI; rather, it requires clearer delineation and proactive engagement.

Human Oversight: The Essential Factor

Human judgment remains indispensable. AI tools can amplify analytical capabilities but cannot replace ethical discernment, contextual awareness, and strategic oversight. Active human involvement ensures the early detection and rapid correction of anomalies or failures.

ARiMI emphasises structured oversight processes, ensuring human accountability is firmly embedded within all AI-supported activities.

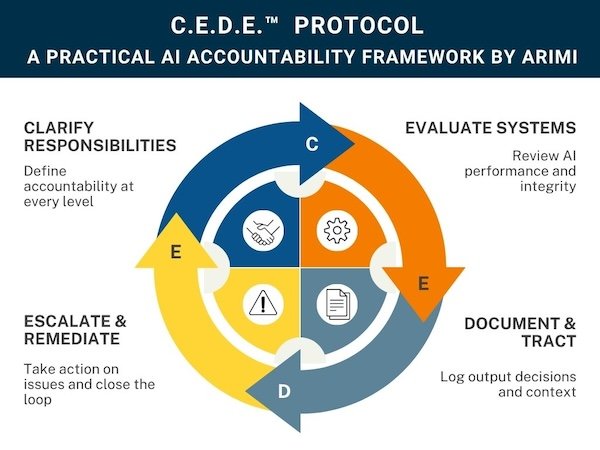

C.E.D.E.™ Protocol: Structured Oversight for AI Accountability

To manage accountability in AI systems in practice, ARiMI introduces the C.E.D.E.™ Protocol (Clarify, Evaluate, Document, Escalate), a four-part framework designed to provide structure, traceability, and operational clarity. This protocol supports professionals and organisations in anticipating challenges, maintaining discipline, and acting decisively when systems misfire.

Protocol 1: Clarify Responsibilities of Stakeholders

- Assign clear, role-specific accountabilities across all levels, including Developers, Risk Professionals, Senior Management, Board Members, and operational or support teams involved in AI deployment or oversight.

- Define responsibilities based on actual decisions and actions, not just job titles or formal positions.

- Structure responsibilities to prompt timely engagement, ensuring the right individuals are equipped and expected to question, flag, or intervene when AI influences decisions.

Protocol 2: Evaluate AI Systems

- Establish regular review schedules and criteria for AI tools in use.

- Ensure performance, data integrity, and decision alignment are actively monitored, not assumed.

- Designate responsible roles for triggering, conducting, and documenting evaluations as part of system oversight.
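To illustrate how review schedules and designated roles can fit together, here is a minimal sketch that flags AI tools whose scheduled review is overdue. It assumes each tool sits in a simple register with a named owner and a fixed review interval; `AITool`, `overdue_reviews`, the tool names, owners, dates, and intervals are all hypothetical examples, not part of the ARiMI framework.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class AITool:
    """A hypothetical register entry for an AI tool under periodic review."""
    name: str
    owner: str                 # role responsible for triggering the review
    last_reviewed: date
    review_interval_days: int  # review frequency set by the organisation

    def next_review(self) -> date:
        """Date on which the next scheduled review falls due."""
        return self.last_reviewed + timedelta(days=self.review_interval_days)


def overdue_reviews(register: List[AITool], today: date) -> List[str]:
    """List tools whose scheduled review date has passed, with the accountable owner."""
    return [
        f"{t.name} (owner: {t.owner}, due {t.next_review().isoformat()})"
        for t in register
        if t.next_review() < today
    ]


# Illustrative register entries (hypothetical tools, owners and dates).
register = [
    AITool("contract-clause-summariser", "Legal Risk Lead", date(2024, 1, 15), 90),
    AITool("fraud-alert-model", "Model Risk Manager", date(2024, 3, 1), 30),
]
print(overdue_reviews(register, today=date(2024, 4, 10)))
```

Here the second tool was due for review on 31 March 2024 and is flagged together with its accountable owner, so the review is triggered by the schedule rather than assumed to have happened.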

Protocol 3: Document and Track

- Maintain structured records of AI-related decisions, anomalies, and interventions.

- Apply consistent documentation practices that support traceability, learning, and audit readiness.

- Ensure that decisions and actions can be clearly explained, professionally justified, and confidently reviewed when questioned.
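Protocol 3 does not prescribe a record format. As a minimal sketch, assuming a simple append-only log kept as JSON lines, a decision record might capture which AI tool produced an output, who reviewed it, what they did, and why. The class `AIDecisionRecord`, the function `log_decision`, the field names, the allowed actions, and the sample entry are hypothetical illustrations, not part of the ARiMI framework.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import List


@dataclass
class AIDecisionRecord:
    """One entry in a hypothetical AI decision log (illustrative fields only)."""
    tool: str            # AI tool or model that produced the output
    output_summary: str  # what the AI recommended or produced
    reviewer: str        # person accountable for validating the output
    action: str          # "accepted", "overridden" or "escalated"
    rationale: str       # why the reviewer took this action
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


ALLOWED_ACTIONS = {"accepted", "overridden", "escalated"}


def log_decision(log: List[str], record: AIDecisionRecord) -> None:
    """Validate a record and append it to the log as one JSON line."""
    if record.action not in ALLOWED_ACTIONS:
        raise ValueError(f"Unknown action: {record.action!r}")
    if not record.rationale.strip():
        raise ValueError("A rationale is required so the decision can be justified on review")
    log.append(json.dumps(asdict(record)))


# Hypothetical example: a reviewer overrides an AI risk rating.
audit_log: List[str] = []
log_decision(audit_log, AIDecisionRecord(
    tool="supplier-risk-scorer",
    output_summary="Rated supplier X as low risk",
    reviewer="j.tan",
    action="overridden",
    rationale="Recent adverse news not reflected in model inputs",
))
print(audit_log[0])
```

Requiring a non-empty rationale reflects the third point above: each entry should let a reviewer explain and justify the decision later, not just show that it was made.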

Protocol 4: Escalate and Remediate

- Define escalation thresholds, response triggers, and structured communication lines.

- Ensure teams act quickly when AI outputs deviate from norms or create risk exposure.

- Log, review, and integrate remediation actions into future practice to improve system reliability and organisational learning.

These protocols empower organisations to anticipate and respond effectively to AI-related challenges, reinforcing trust and organisational resilience.

The C.E.D.E.™ Protocol provides a practical structure for embedding accountability into every stage of AI adoption and operation, ensuring that responses are timely, credible, transparent, and justifiable.
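The escalation thresholds and response triggers in Protocol 4 can be sketched as simple rules. This minimal sketch assumes a single monitored metric (an output error rate) and two illustrative thresholds; the class `EscalationRule`, the function `escalate`, the threshold values, and the routing targets are hypothetical and would in practice be set by each organisation's own risk appetite and governance structure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EscalationRule:
    """A hypothetical threshold rule: if the metric reaches `threshold`, notify `route_to`."""
    threshold: float
    level: str
    route_to: str


# Illustrative rules for an error-rate metric (values are assumptions, not guidance).
RULES: List[EscalationRule] = [
    EscalationRule(0.05, "elevated", "Risk Owner: investigate and document the deviation"),
    EscalationRule(0.10, "critical", "Senior Management: suspend tool pending review"),
]


def escalate(error_rate: float, rules: List[EscalationRule] = RULES) -> str:
    """Return the routing instruction for the most severe rule whose threshold is met."""
    for rule in sorted(rules, key=lambda r: r.threshold, reverse=True):
        if error_rate >= rule.threshold:
            return f"{rule.level}: {rule.route_to}"
    return "normal: continue routine monitoring"


for rate in (0.02, 0.07, 0.12):
    print(rate, "->", escalate(rate))
```

Checking the most severe rule first means an output that breaches several thresholds is routed to the highest level that applies, regardless of the order in which the rules were written down.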

Practical Accountability Training

Effective accountability requires active preparation and training. ARiMI’s specialised accountability module includes:

- Real-world case studies

- Role-specific checklists

- Interactive incident simulations

- Guidelines for selecting, using, and documenting AI tools

Foundation of Responsible AI Adoption

Accountability is foundational. ARiMI ensures that risk professionals are thoroughly trained, certified, and supported in accountability practices, keeping AI use ethically grounded, transparent, and aligned with professional standards.